Background

According to a study led by researchers at the University of California, Berkeley the passengers loose $16 billion a year due to delayed flights -- learn more. According to the data I analyzed 16 % of flights within the USA were delayed in 2016. It is of high interest to give a reliable flight ontime prediction which is not including factors which are unknown at the time of booking, therefore the weather should not be taken into account.Summary

I studied flight ontime performance data which is accessible at the Bureau of Transportation Statistics. Based on all 5.5 million domestic flights from 2016 I generated a flight delay prediction model for flights within the USA. The size of the analyzed dataset is 600 MB.An application runs on heroku with a web interface ONTIMEPREDICTOR and the code can be found on github thedatabeat. The code for preprocessing, analysis and evaluation of the data is located at this repository .

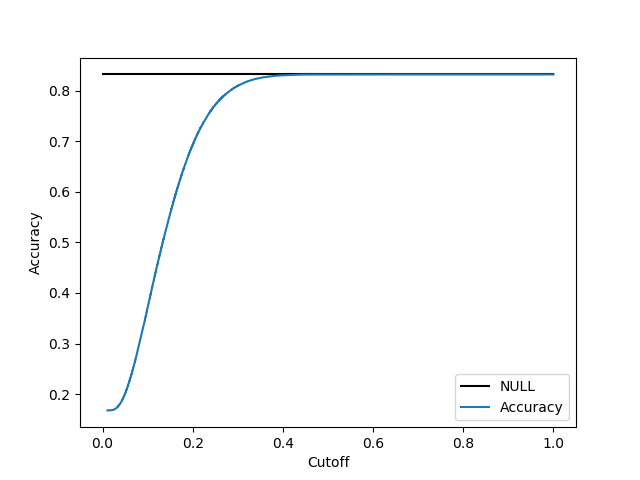

The dataset has 83% ontime flights, therefore if one predicts every flight ontime the

percentage of correctly predicted ontime flights is 83%.

In the model provided, the percentage of correctly predicted ontime flights is 89%.

The area under curve (AUC) score is 0.67.

After testing various machine learning algorithms such as logistic regression, support vector machines, random forest, neural networks, AdaBoost and gradient boosting, I choose logistic regression to generate the model. The other more sophisticated models did not generate better results due to insufficient structure in the data therefore I applied Occam's razor.

All the analysis is made on python using various libraries such as pandas and sklearn.

Prior Analysis

The features I took into account were MONTH, DAY_OF_MONTH, DAY_OF_WEEK, CARRIER, ORIGIN, DESTINATION, and CRS_DEPARTURE_TIME.First I studied the significance of each feature. I did this in the mindset of a 2-sided t-test. This went as follows: Fix a feature. Compute for several splits of the dataset (approximately 500 splits) the ratio of delayed flights for each value of this feature. Let's name this quantity Q , which depends on splits and the feature values. Then compute for each feature value the mean and standard deviation of Q . For each pair of feature values compute then the ratio of difference between the mean values and an average standard deviation and multiply it by the square root of the number of splits. This measures the significance between pairs of feature values, from this numbers maximize over the pairs of feature values. This is big if the feature is significant and small otherwise.

From this analysis came out that some features are more significant than others. But the machine learning algorithms performed best when all features were fed.

To get numerical values for each feature I introduced dummy variables. The CRS_DEPARTURE_TIME was treated categorical by splitting it into one hour blocks.

Logistic Regression

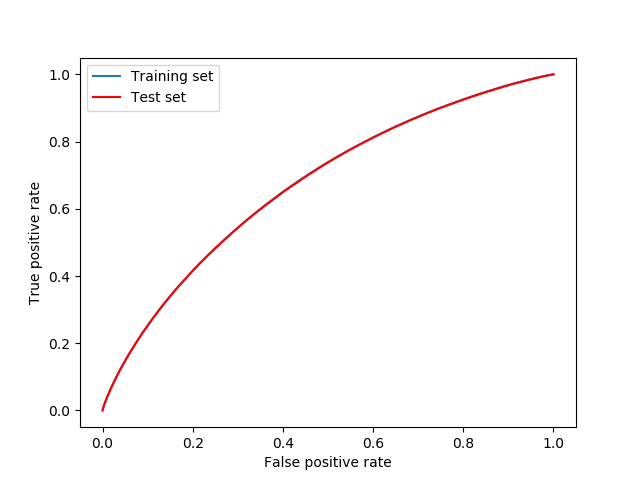

I trained a logistic regression model on 60% of the data and cross validated it on the remaining 40%. The parameters were chosen in a way that best results were obtained. Note that the label positive means delayed and the label negative means ontime. The first plot is the receiver operating curve, the area under this curve is the AUC-score which is 0.67. One can see that the curve is the same on the training and the test set which indicates

that the model is not overfitted.

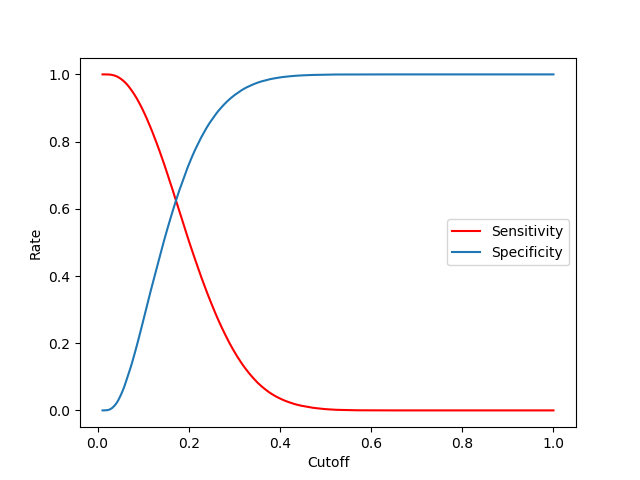

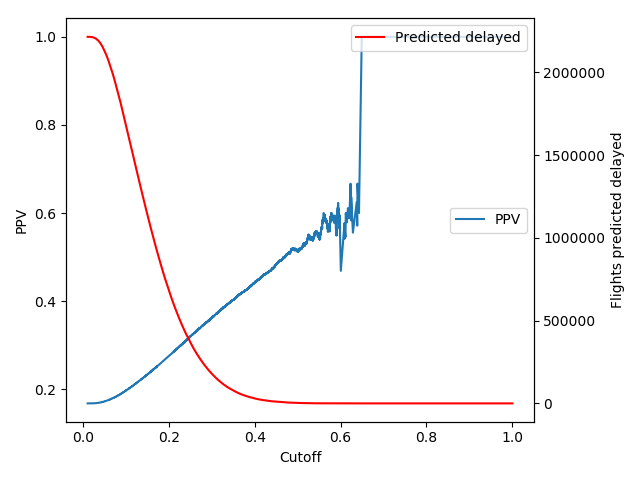

I choose the prediction cutoff to be 0.17.

This is the point were

sensitivity=

(flights predicded delayed and delayed)/(delayed flights)

specificity = (predicded ontime and ontime)/(ontime flights)

are of equal size.

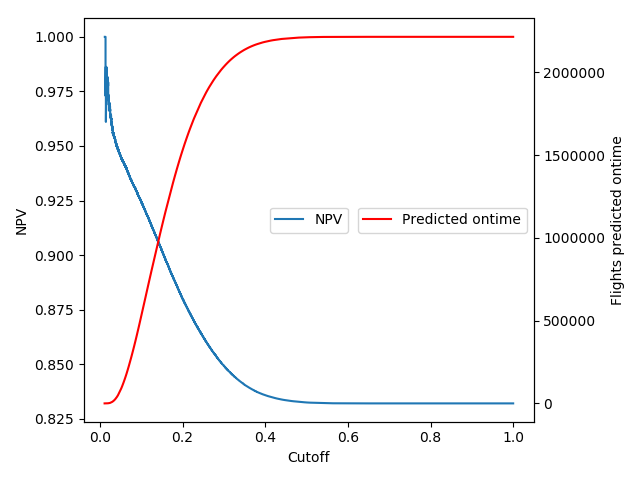

The negative prediction value = (predicded ontime and ontime)/(predicted ontime)

is the quantiy I mentioned in the summary. If this value is around 0.9 we classify a bit less than half of the flights ontime.

The positive prediction value = (predicted delayed and delayed)/(predicted delayed)

is low. It says how reliable is a delayed prediction. If one predicts all flights delayed then this prediction is right with probability

0.16. With the model I can reach a probability of 0.3 when I predict about 25 % of the flights delayed.

As the next plot shows I did not aim to maximize the accuracy by choosing the cutoff to be 0.17. I wanted to optimize

the sensitivity and specificity.